Biography

Minghui (Scott) Zhao is a fourth-year Ph.D. candidate at Columbia University’s Intelligent and Connected Systems Lab (ICSL), supervised by Prof. Xiaofan (Fred) Jiang. His research focuses on developing embodied and embedded AI systems that enable intelligent agents to perceive, understand, and act in the physical world. Through hardware-software co-design and physics-informed machine learning, he develops novel sensing techniques, intelligent decision-making frameworks, and accessible hardware platforms that perform robustly in real-world, resource-constrained settings. These systems enable new capabilities in autonomous inspection and indoor logistics, in home-based and community health monitoring that supports aging and remote care, and in intelligent homes and buildings, paving the way for AI that actively participates in and improves our daily lives. Before his Ph.D., Scott earned his B.S. in Electrical Engineering from UC San Diego and his M.S. in Computer Engineering from Columbia University.

- Embedded Systems + AI

- Mobile and Ubiquitous Computing

- Physical and Embodied AI

PhD in Electrical Engineering, 2026

Columbia University

MS in Computer Engineering, 2022

Columbia University

BS in Electrical Engineering, 2020

University of California San Diego (UCSD)

Experience

Embodied and Embedded AI Systems Research

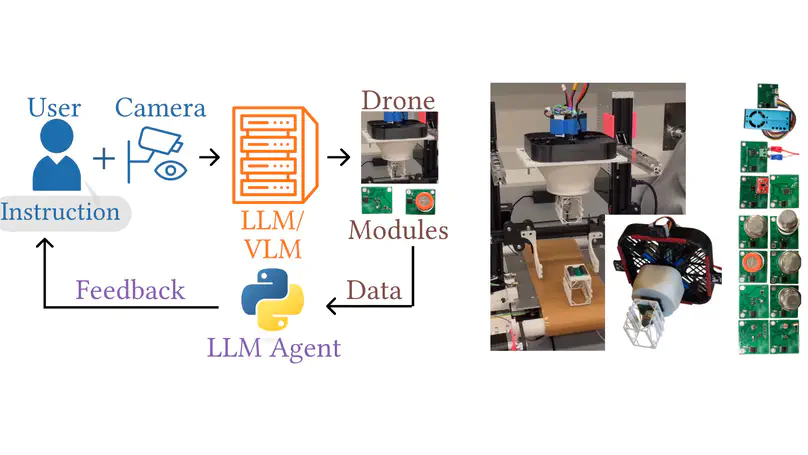

- Developed FlexiFly: Embodied LLM agent with reconfigurable drone platform (SenSys'25, TIoT'25)

- Created Anemoi: Sensorless 3D airflow mapping system (MobiCom'23)

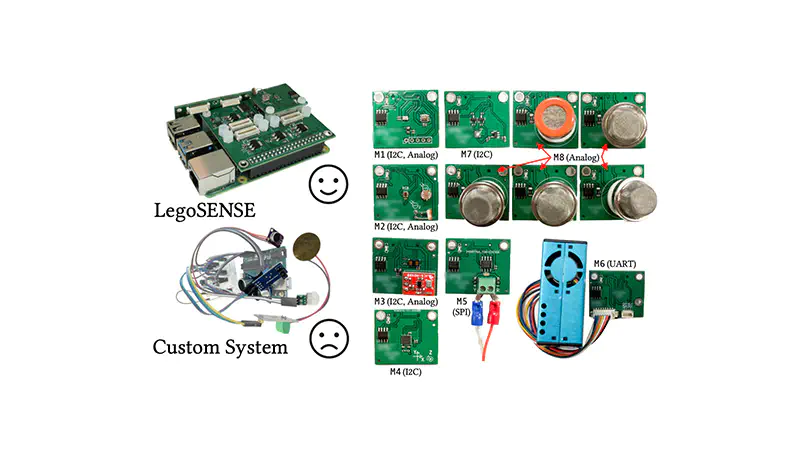

- Built accessible IoT platforms and programless smart home systems (IoTDI'23, HumanSys'25)

- Developed acoustic-based mobile health systems for running analytics (IMWUT'25, SenSys'25)

Wearable Voice Interface for Agentic LLM

- Designed and prototyped a voice-controlled wearable ring for agentic LLM task execution

- Developed end-to-end system architecture including mobile app and BLE firmware

- Integrated with VS Code Copilot and internal agentic frameworks using LangGraph

- Led user study lifecycle and presented demos to company leadership

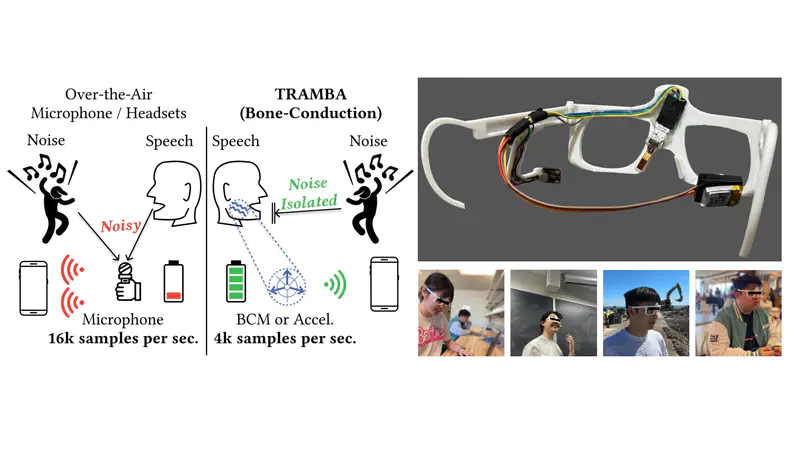

Wearable Low-Power Speech Enhancement Platform (TRAMBA)

- Developed novel speech enhancement for bone-conduction microphones and accelerometers

- Created hybrid transformer-Mamba architecture for resource-constrained wearables

- Achieved 160% battery life improvement with 75% word error rate (WER) reduction in noisy environments

- First-author paper in ACM IMWUT'24

Light Stage System for 3D Reconstruction

- Built light stage with 800+ wirelessly controlled high-power LEDs

- Designed modular PCBs and developed C firmware for the ESP32

- Created automation software interfacing light systems with industrial cameras

UWB Localization and Wearable Systems

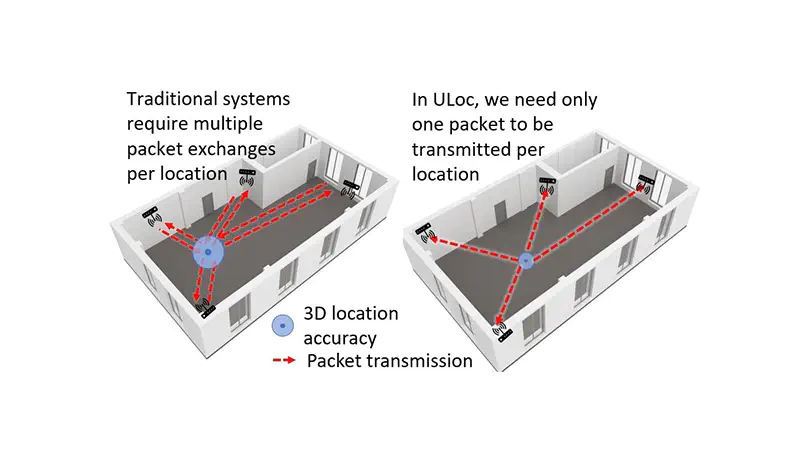

- Designed ULoc: Centimeter-accurate scalable 3D UWB tag localization system (IMWUT'21)

- Developed embedded firmware and hardware for UWB transceiver arrays

- Achieved 3.6 cm stationary accuracy and 10 cm tracking accuracy

Featured Publications

An embodied AI system combining foundation models with a custom drone platform that can autonomously reconfigure its sensors and actuators to accomplish diverse physical tasks.

A hybrid transformer-Mamba architecture for wearable speech enhancement using bone conduction sensors, achieving superior quality with 160% battery life improvement and 465x faster inference.

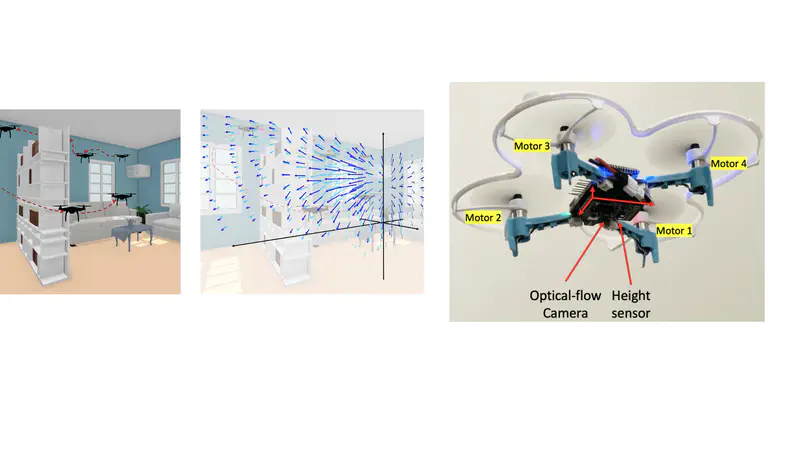

Anemoi is a sub-$100 drone-based system that autonomously maps 3D indoor airflow fields without any external sensors by leveraging the effects of airflow on motor control signals. It outperforms existing methods, with significantly lower errors in wind speed and direction estimation.